HOLO 3: Pattern Recognition

Nora N. Khan is a New York-based writer and critic with bylines in Artforum, Flash Art, and Mousse. She steers the 2021 HOLO Annual as Editorial Lead.

In taking on the charge for this year’s Annual, I’ve tried to consider what it is one might even want to read this year, of all years. What do we need to read, about computation, or AI ethics, or art, systems, emergence, experimentation, or technology? Where do we want to find ourselves, at any muddy intersections between fields, as we bridge so many ongoing, devastating crises?

I suspect I might not be alone in saying: it has been immensely, unspeakably difficult this year, to work, to write, and to think clearly and deeply about a single thing. To locate that kernel of interest that may have easily driven one’s writing, thinking, reviewing, in any other year. Such is the impact of grief, collective trauma, and loss: we put our energies into mental survival, not 10,000-word essays about ontology or facial recognition.

And yet many of us have persisted: giving talks, filing essays, publishing books, putting on shows while masked in outdoor venues, projecting work onto buildings, playing raves in empty bunkers, speaking on panels with people we’ll never meet. I have watched my favourite thinkers show up, to deliver moving performance lectures, activating their theory within and through this moment.

There was beauty, still. Watching poets and thinkers show up on screens to read from their work, addressing the moment, mirroring it, demanding more from it, in the middle of so much heartbreak. We watched Wendy Chun speak on “theory as a form of witness.” We watched Fred Moten and Stefano Harney challenge us to abandon the institution, abandon our little titles and little dreams of control. People we read, we continued to think with and alongside them. We tried to find ways to put their ideas into practice in our day to day.

This isn’t to valorize grinding despite crisis, but instead to see the work as something done in response to and because of the crushing pressure of neoliberalism, technocracy, and surveillance, as all these forces wreak havoc in the collective. Maybe some of you have found something moving in this effort. I found it emboldening.

Left: New media theorist and Simon Fraser University Professor Wendy Hui Kyong Chun, director of the Digital Democracies Institute and author of the forthcoming book Discriminating Data.

Right: Critical theorist and Black studies scholar Fred Moten, Professor at New York University and author of In the Break (2003), and other books.

Like most begrudging digital serfs, each day I also consumed way more content than I could ever handle. I followed the algorithm and was led by its offerings, trying to make sense of what was offered. As a critic tends to do, I also found myself tracking patterns. Patterns in arguments, patterns in semantic structures, patterns in phrases traded easily, without friction, between headlines and captions.

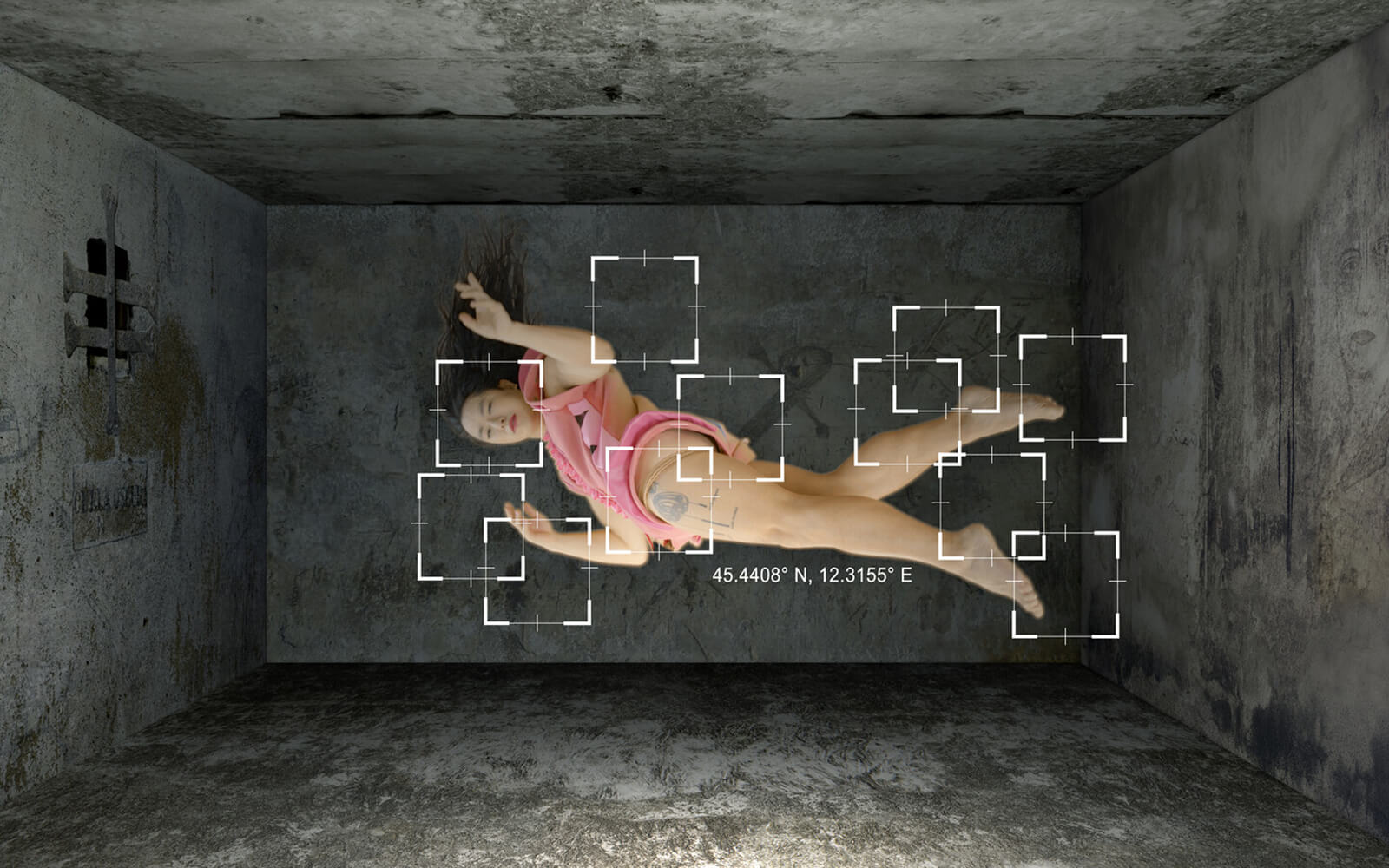

I found myself frustrated with the loops of rhetoric within the technological critiques I’ve made myself for a while now. In 2020, into 2021, there are ever more frantic discussions of black-boxed AI, “light” sonic warfare through LRADs used at protests, and iterative waves of surveillance flowing in the wake of contact tracing. Debates repeat: about human bias driving machine bias, about the imaginary of the clean dataset. Each day has a reveal of a new fresh horror of algorithmic sabotage. Artistic interventions, revealing the violence and inner workings of opaque infrastructure, seemed to end where they began.

It felt more important, this year, to connect our critiques of technology to capitalism, and to understand certain technologies as a direct extension, and expression, of the carceral state. I cracked open Wendy H.K. Chun’s Updating to Remain the Same (2016) and Simone Browne’s Dark Matters (2015) and Jackie Wang’s Carceral Capitalism (2018) again, and kept them open on my desk most of the year. When I read of both police violence and hiring choices alike offloaded onto predictive algorithms, I opened Safiya Noble’s Algorithms of Oppression or Merve Emre’s The Personality Brokers. There were gifts, like Neta Bomani’s film Dark matter objects: Technologies of capture and things that can’t be held, with the citations we needed. I learned the most out of centering critical theory/pop sensation Mandy Harris Williams (@idealblackfemale on Instagram) on the feed.

I looked, in short, for the wry minds and voices in criticism, in media studies, in computational theory, in activism, who flow easily between these spaces and more, and who have been thinking about these issues for a long time. A few had quieted down. Others had never really stopped tracking the patterns or decoding mystification in language. For years in the pages of HOLO, writers and artists have been investigating issues that have only built in intensity and spread: surveillance creep, digital mediation, networked infrastructure, and the necessary, ongoing debunking of technological neutrality.

Nora’s picks: Wendy Hui Kyong Chun, Updating to Remain the Same (2016); Jackie Wang, Carceral Capitalism (2018); Simone Browne, Dark Matters (2015); “theory/pop sensation” @idealblackfemale on Instagram.

Neta Bomani’s Dark matter objects, a film about “technologies of capture and things that can’t be held.”

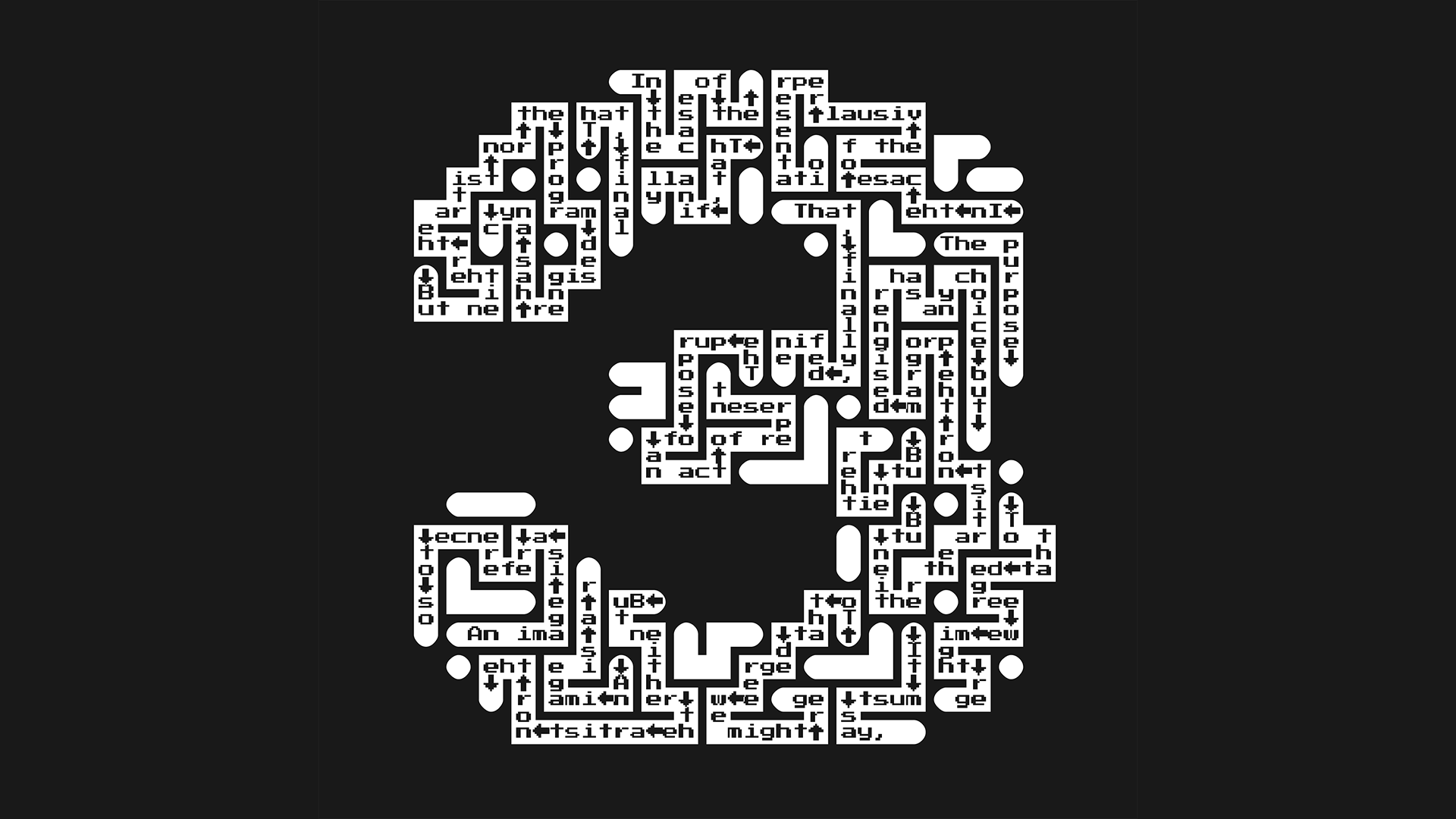

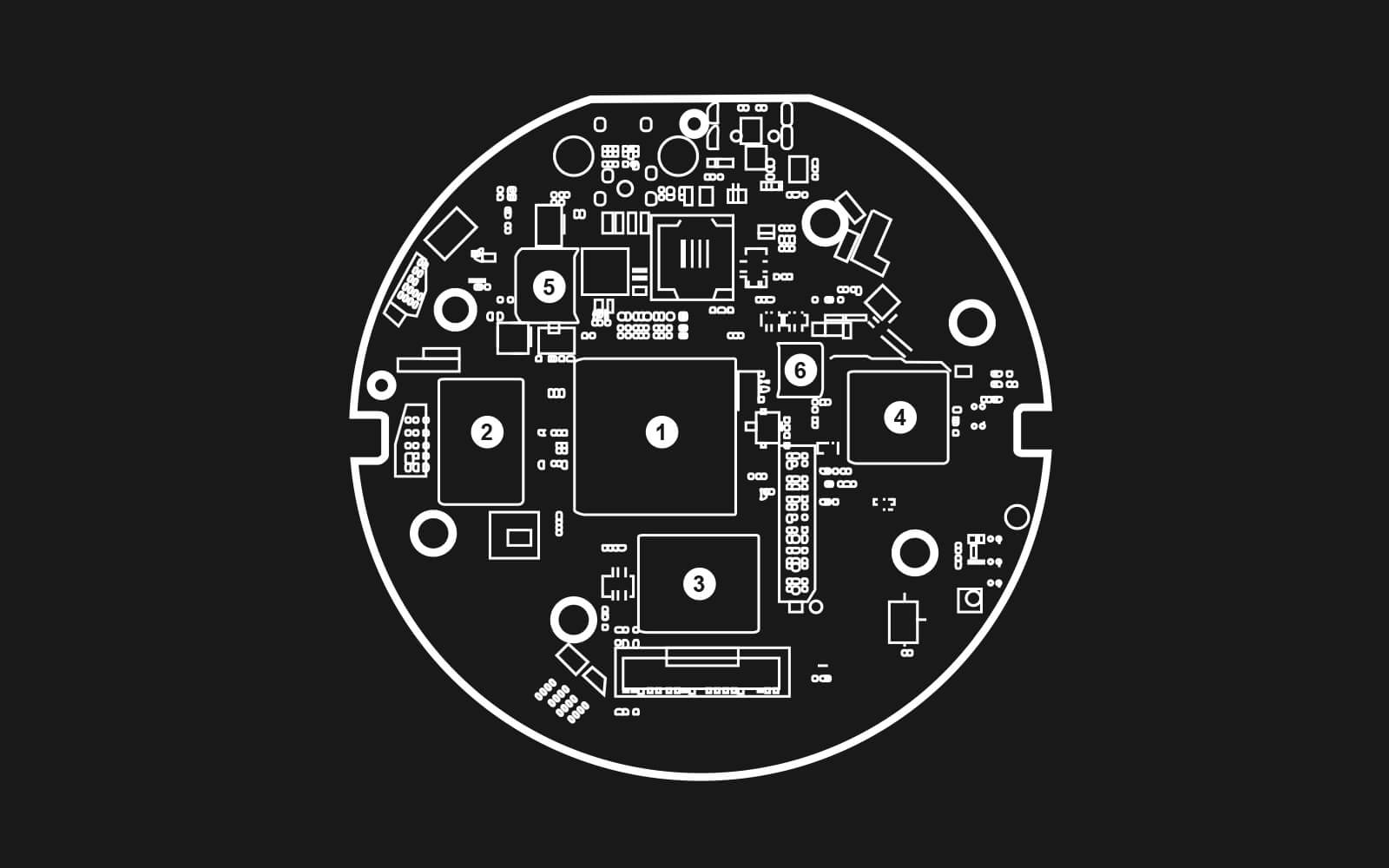

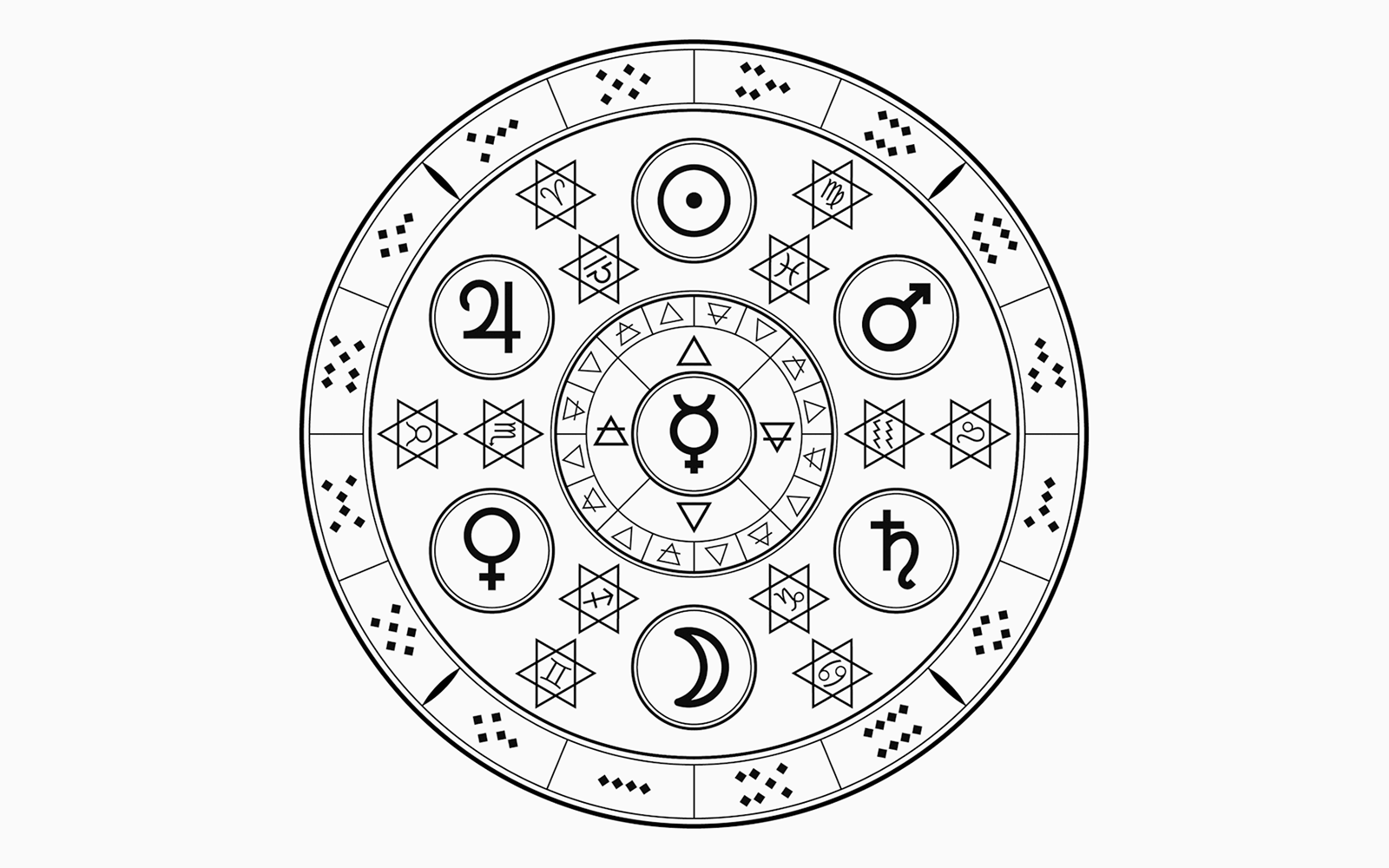

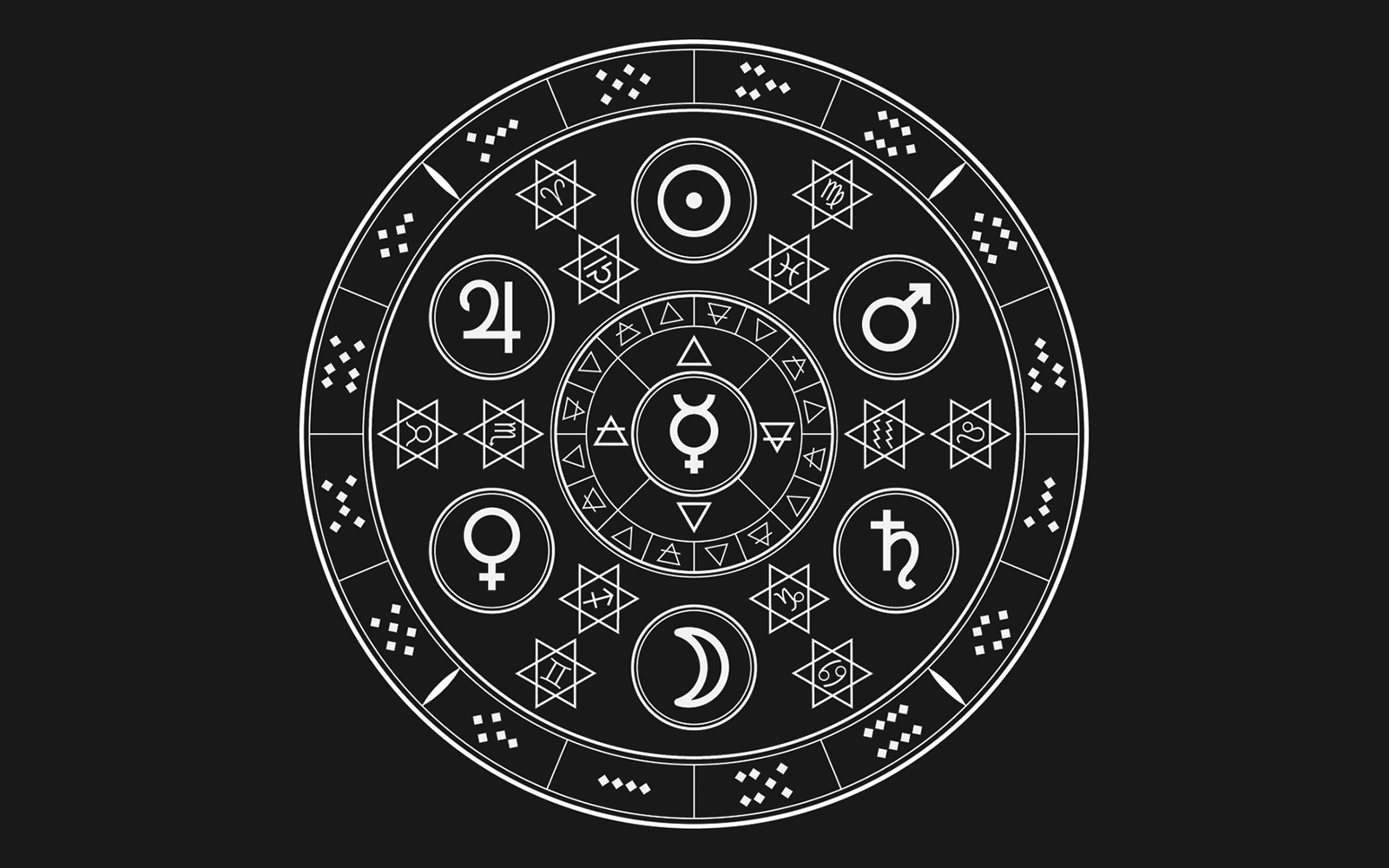

We started this letter by noting patterns. Language patterns are the root of this Annual’s editorial frame. The language of obfuscation and mysticism, in which technological developments are framed as remote, or mystical, as partially-known, as just beyond the scope of human agency, crops up in criticism and discourse. One hears of a priestlike class in ownership of all information. Of systems that their creator-engineers barely understand. Of a present and near-technological future that is so inscrutable (and monumental) that we can only speak of the systems we build as like another being, with its own seemingly growing consciousness. A kind of divinity, a kind of magic.

Right alongside this mythic language is also the language of legibility and technology’s explainability. A thing we make, and so, a thing we know. These languages are often side by side in the media(s) flooding us. Behind the curtain is the little man, the wizard, writing spells, in a new language. Legions of artists have worked critically with AI, working to enhance and diversify and complicate the datasets. We’ve read about the diligent work of unpacking the black boxes of artificial intelligence, of politicians demanding an ethical review, to ‘make legible’ the internal processes at play. Is legibility enough? Legibility has its punishments, too. In 2020, Timnit Gebru, Google’s former Senior Research Scientist, asked for the company to be held accountable to its AI Ethics governance board. This resulted in Gebru’s widely publicized firing, and harassment across the internet. The horror Gebru experienced prompted critical speculation and derision about AI Ethics, a refinement in service of extraction and capitalism. It’s almost like … the problems are systemic, replicated, trackable, and mappable across decades and centuries.

As we are trawled within these wildly enmeshed algorithmic nets, how do we draw on these patterns, of mystification and predictive capture, to see the next ship approaching? To imagine alternatives, if escape is not an option? What stories and myths do we need to tell about technology now, to foretell models we need and can agree on wanting to live through and be interpreted by?

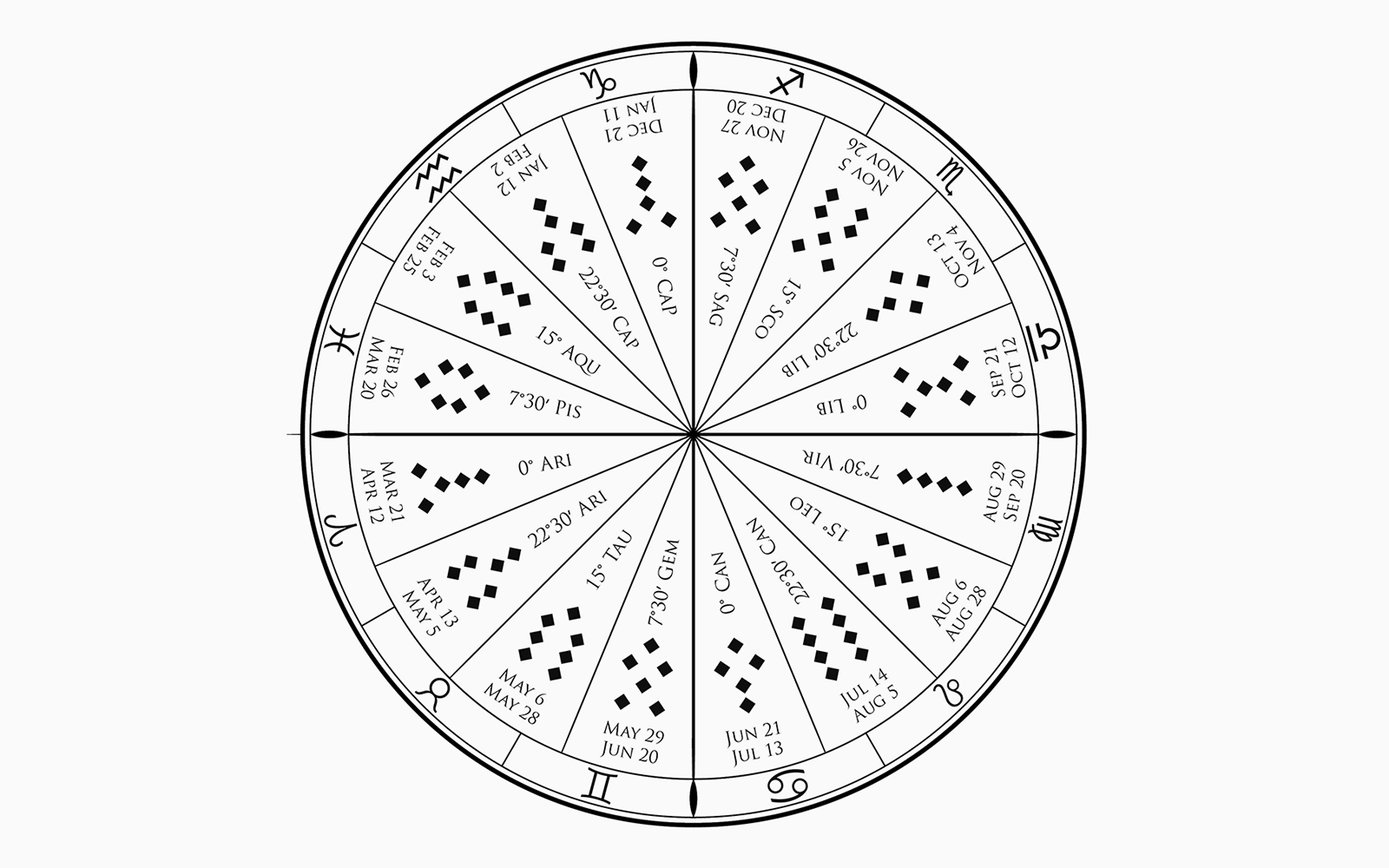

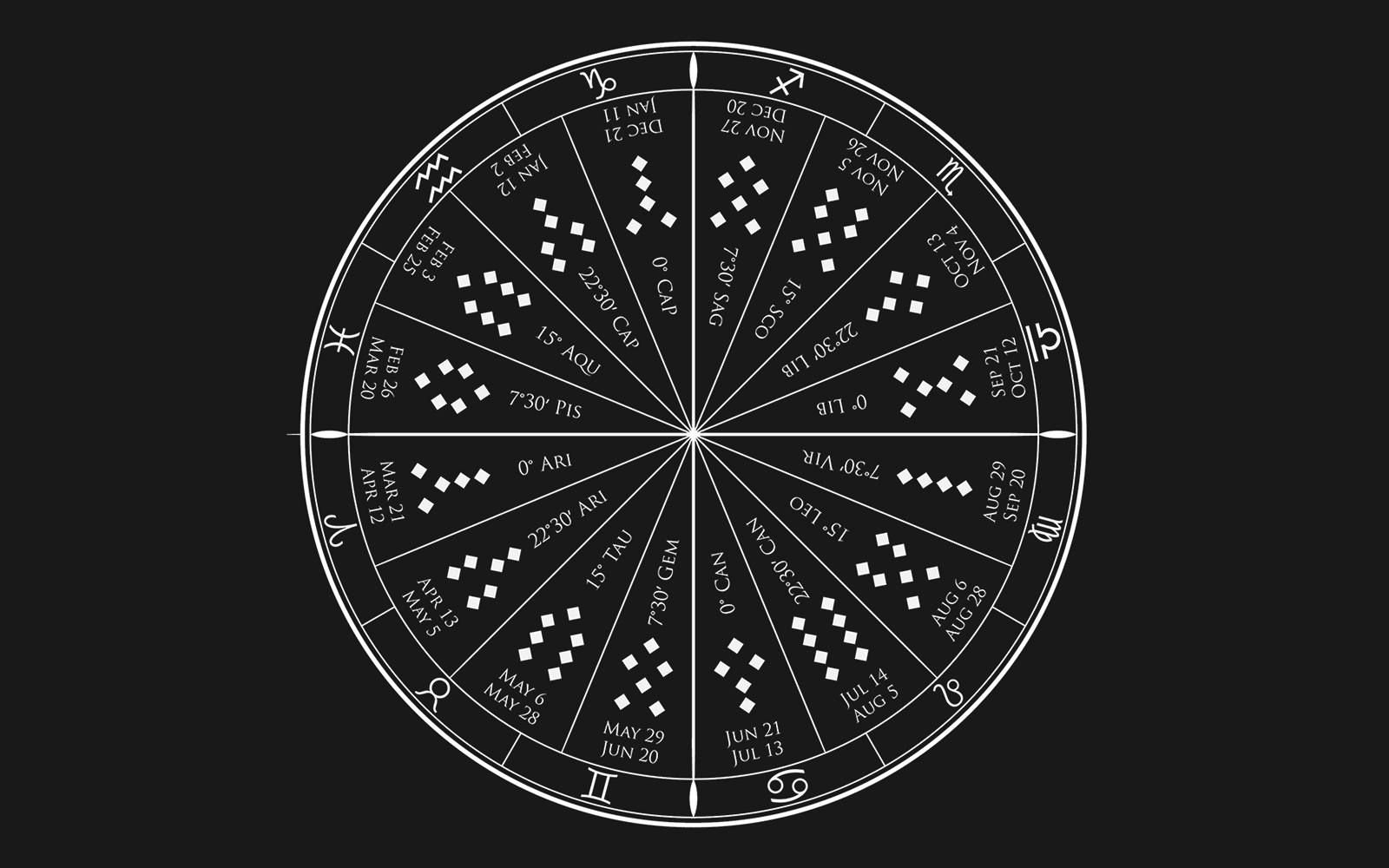

Diagrams: in geomancy, cast handfuls of soil, rocks, or sand are cross-referenced with sixteen figures to divine the future.

To start to answer this question, over the past month, we have asked contributors to respond to one of four prompts, themed Explainability and Its Discontents; Enchantment, Mystification, and Partial Ways of Knowing; Myths of Prediction Over Time; and Mapping and Drawing Outside of Language. They have been asked to think about models of explainability, diverse storytelling that translates the epistemology of AI, and myths about algorithmic knowledge, in order to half-know, three-quarters know, maybe, the systems being built, refined, optimized. They’ve been asked to consider speculative and blurry logic, predictive flattening, and the cultural stories we tell about technology, and art, and the space made by the two. Finally, to close the issue, a few have been asked to think about ways of knowing and thinking outside of language, in search of methods of mapping, pattern recognition, and artistic research that will help us in the future.

We can’t do this thinking and questioning alone. In the last weeks, we’ve gathered a first constellation of forceful responses—and the momentum is building. We are thrilled to begin to map these spaces of partial and more-than-partial knowing, and see what emerges, along with you all.